While scoping out new ASAs for a project, it dawned on me that I had no idea where the throughput figures quoted throughout Cisco’s marketing material come from. You can see some of these figures on datasheets like this one: http://www.cisco.com/c/en/us/products/security/asa-firepower-services/models-comparison.html. I couldn’t find anything online showing exactly how to calculate them, so I ended up opening a TAC case. Here’s what TAC had to say:

Calculating Throughput

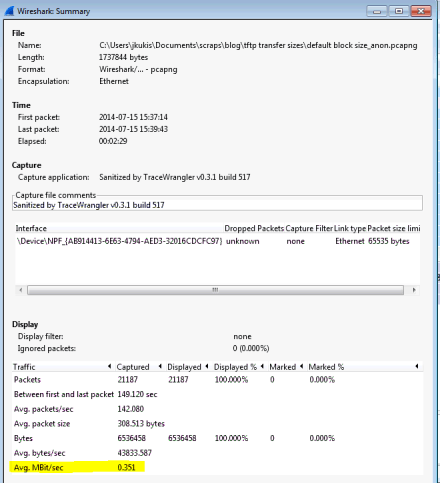

Unfortunately, there is no single place to see the ASA’s current throughput. You can, however, work it out from CLI statistics with a little math. It’s best to do this during a period when you expect your average amount of traffic to be passing through the firewall, or during an expected peak so that you have a maximum throughput value to work from.

1. Log in to the ASA via the CLI and run the ‘clear traffic’ and ‘clear interface’ commands to zero out the statistics. This won’t impact any traffic.

2. Wait about 5 minutes for the ASA to gather statistics on traffic traversing the firewall.

3. Run the ‘show traffic’ command.

4. Go to the section “Aggregated Traffic on Physical Interface”.

5. In that section, gather the received bytes/sec and transmitted bytes/sec for all the physical interfaces (management included, internal data interfaces not included).

6. Add together all the received and transmitted values you gathered.

7. Since the result is in bytes/sec, multiply it by 8 to get bits/sec.

8. Divide the result by 1024 to get kbps.

9. Finally, divide the result by 1024 again to get Mbps.
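The arithmetic in the last few steps can be sketched in Python. This is a minimal illustration, not a Cisco tool: `throughput_mbps` is a hypothetical helper name, and it assumes you have already copied the received and transmitted bytes/sec values into a plain list. It follows TAC’s steps literally, dividing by 1024 twice.

```python
def throughput_mbps(bytes_per_sec: list[int]) -> float:
    """Convert received/transmitted bytes/sec values into Mbps.

    `throughput_mbps` is a hypothetical helper used for illustration only.
    """
    total_bytes = sum(bytes_per_sec)  # add all received and transmitted values
    bits_per_sec = total_bytes * 8    # bytes/sec -> bits/sec
    kbps = bits_per_sec / 1024        # bits/sec -> kbps (1024-based, per TAC)
    return kbps / 1024                # kbps -> Mbps


# Received and transmitted bytes/sec for the physical interfaces,
# copied from the sample 'show traffic' output in this post.
sample = [
    14115596, 2082300,  # GigabitEthernet0/0
    2061271, 13884659,  # GigabitEthernet0/1
    13482, 13492,       # GigabitEthernet0/2
    36038, 609116,      # GigabitEthernet0/3
    215, 219619,        # Management0/0
]
print(round(throughput_mbps(sample), 2))  # 252.04
```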

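Here’s an example of the ‘Aggregated Traffic’ section of my ‘show traffic’ output. The values you need to add up in steps 5 and 6 are the average bytes/sec figures on the line directly under each “received” and “transmitted” header, for GigabitEthernet0/0 through 0/3 and Management0/0.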

```text
----------------------------------------
Aggregated Traffic on Physical Interface
----------------------------------------
GigabitEthernet0/0:
received (in 313.200 secs):
3974936 packets 4421004800 bytes
12691 pkts/sec 14115596 bytes/sec
transmitted (in 313.200 secs):
2504824 packets 652176414 bytes
7997 pkts/sec 2082300 bytes/sec
1 minute input rate 11450 pkts/sec, 12411522 bytes/sec
1 minute output rate 7341 pkts/sec, 1936331 bytes/sec
1 minute drop rate, 0 pkts/sec
5 minute input rate 3248 pkts/sec, 3543329 bytes/sec
5 minute output rate 2104 pkts/sec, 558594 bytes/sec
5 minute drop rate, 0 pkts/sec
GigabitEthernet0/1:
received (in 313.440 secs):
2484960 packets 646085090 bytes
7928 pkts/sec 2061271 bytes/sec
transmitted (in 313.440 secs):
4405564 packets 4352007757 bytes
14055 pkts/sec 13884659 bytes/sec
1 minute input rate 7451 pkts/sec, 1932038 bytes/sec
1 minute output rate 13124 pkts/sec, 12648429 bytes/sec
1 minute drop rate, 0 pkts/sec
5 minute input rate 2113 pkts/sec, 555686 bytes/sec
5 minute output rate 3687 pkts/sec, 3593754 bytes/sec
5 minute drop rate, 0 pkts/sec
GigabitEthernet0/2:
received (in 313.440 secs):
10315 packets 4225880 bytes
32 pkts/sec 13482 bytes/sec
transmitted (in 313.440 secs):
10961 packets 4229214 bytes
34 pkts/sec 13492 bytes/sec
1 minute input rate 26 pkts/sec, 10650 bytes/sec
1 minute output rate 29 pkts/sec, 9610 bytes/sec
1 minute drop rate, 0 pkts/sec
5 minute input rate 8 pkts/sec, 3196 bytes/sec
5 minute output rate 8 pkts/sec, 3342 bytes/sec
5 minute drop rate, 0 pkts/sec
GigabitEthernet0/3:
received (in 314.840 secs):
87198 packets 11346440 bytes
276 pkts/sec 36038 bytes/sec
transmitted (in 314.840 secs):
152634 packets 191774213 bytes
484 pkts/sec 609116 bytes/sec
1 minute input rate 111 pkts/sec, 19918 bytes/sec
1 minute output rate 158 pkts/sec, 152740 bytes/sec
1 minute drop rate, 0 pkts/sec
5 minute input rate 40 pkts/sec, 10201 bytes/sec
5 minute output rate 56 pkts/sec, 56747 bytes/sec
5 minute drop rate, 0 pkts/sec
Internal-Control0/0:
received (in 315.070 secs):
728 packets 115926 bytes
2 pkts/sec 367 bytes/sec
transmitted (in 315.070 secs):
871 packets 63736 bytes
2 pkts/sec 202 bytes/sec
1 minute input rate 2 pkts/sec, 366 bytes/sec
1 minute output rate 2 pkts/sec, 201 bytes/sec
1 minute drop rate, 0 pkts/sec
5 minute input rate 0 pkts/sec, 102 bytes/sec
5 minute output rate 0 pkts/sec, 56 bytes/sec
5 minute drop rate, 0 pkts/sec
Internal-Data0/0:
received (in 315.320 secs):
6541313 packets 5424615442 bytes
20744 pkts/sec 17203524 bytes/sec
transmitted (in 315.320 secs):
6541381 packets 5424661914 bytes
20745 pkts/sec 17203672 bytes/sec
1 minute input rate 18798 pkts/sec, 15250485 bytes/sec
1 minute output rate 18798 pkts/sec, 15250444 bytes/sec
1 minute drop rate, 0 pkts/sec
5 minute input rate 5358 pkts/sec, 4362296 bytes/sec
5 minute output rate 5358 pkts/sec, 4362296 bytes/sec
5 minute drop rate, 0 pkts/sec
Management0/0:
received (in 315.530 secs):
501 packets 67986 bytes
1 pkts/sec 215 bytes/sec
transmitted (in 315.530 secs):
51582 packets 69296696 bytes
163 pkts/sec 219619 bytes/sec
1 minute input rate 1 pkts/sec, 218 bytes/sec
1 minute output rate 157 pkts/sec, 211434 bytes/sec
1 minute drop rate, 0 pkts/sec
5 minute input rate 0 pkts/sec, 60 bytes/sec
5 minute output rate 45 pkts/sec, 61297 bytes/sec
5 minute drop rate, 0 pkts/sec
```
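Rather than copying the numbers out by hand, you could parse the pasted ‘show traffic’ output. The sketch below is a hypothetical helper, not a Cisco-provided tool. It assumes the line layout shown above: a bare `Name:` line per interface, a `received (in N secs):` or `transmitted (in N secs):` header, then an `N pkts/sec M bytes/sec` average line. It also assumes any interface whose name starts with `Internal-` is internal and should be excluded. Other ASA models or software versions may format the output differently.

```python
import re

# Assumption: internal (non-physical) interfaces all share this prefix,
# as Internal-Control0/0 and Internal-Data0/0 do in the sample output.
INTERNAL_PREFIX = "Internal-"
HEADER = "Aggregated Traffic on Physical Interface"

IFACE_RE = re.compile(r"^(\S+):$")  # e.g. "GigabitEthernet0/0:"
DIRECTION_RE = re.compile(r"^(received|transmitted) \(in [\d.]+ secs\):$")
RATE_RE = re.compile(r"^\d+ pkts/sec\s+(\d+) bytes/sec$")  # average-rate line only


def physical_rates(show_traffic: str) -> dict[str, dict[str, int]]:
    """Return {interface: {"received": Bps, "transmitted": Bps}} for physical interfaces."""
    _, found, section = show_traffic.partition(HEADER)
    if not found:
        raise ValueError(f"'{HEADER}' section not found")
    rates: dict[str, dict[str, int]] = {}
    iface = direction = None
    for raw in section.splitlines():
        line = raw.strip()  # tolerate any indentation in the pasted output
        if m := IFACE_RE.match(line):
            iface, direction = m.group(1), None
        elif m := DIRECTION_RE.match(line):
            direction = m.group(1)
        elif (m := RATE_RE.match(line)) and iface and direction:
            if not iface.startswith(INTERNAL_PREFIX):
                rates.setdefault(iface, {})[direction] = int(m.group(1))
            direction = None  # only the first rate line after each header counts


    return rates


def total_mbps(rates: dict[str, dict[str, int]]) -> float:
    """Sum every received/transmitted value, then bytes -> bits -> kbps -> Mbps (1024-based)."""
    total_bytes = sum(v for per_dir in rates.values() for v in per_dir.values())
    return total_bytes * 8 / 1024 / 1024
```

For example, saving the full output above to a file and calling `total_mbps(physical_rates(text))` on its contents should land at roughly 252 Mbps, since the helper skips Internal-Control0/0 and Internal-Data0/0.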

If you add up the received and transmitted bytes/sec values for the five physical interfaces and run through the steps above, you come out with about 252 Mbps, which in this case is well below the 650 Mbps the ASA 5540 is rated for.
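For reference, here is the arithmetic behind that figure, using the average received and transmitted bytes/sec for each physical interface in the sample output:

```latex
\begin{aligned}
\text{Gi0/0} &: 14{,}115{,}596 + 2{,}082{,}300 = 16{,}197{,}896 \\
\text{Gi0/1} &: 2{,}061{,}271 + 13{,}884{,}659 = 15{,}945{,}930 \\
\text{Gi0/2} &: 13{,}482 + 13{,}492 = 26{,}974 \\
\text{Gi0/3} &: 36{,}038 + 609{,}116 = 645{,}154 \\
\text{Management0/0} &: 215 + 219{,}619 = 219{,}834 \\
\text{Total} &= 33{,}035{,}788 \ \text{bytes/s} \\
\frac{33{,}035{,}788 \times 8}{1024 \times 1024} &\approx 252.04 \ \text{Mbps}
\end{aligned}
```

Dividing by 1,000,000 instead of 1024 × 1024 would give about 264 Mbps; either way the result is well below the 5540’s 650 Mbps rating.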